How did CHIPRA quality demonstration States employ learning collaboratives to improve children’s health care quality?

Evaluation Highlight No. 13

Authors: Rebecca Peters, Rachel Burton, and Kelly Devers

|

The CHIPRA Quality Demonstration Grant Program

In February 2010, the Centers for Medicare & Medicaid Services (CMS) awarded 10 grants, funding 18 States, to improve the quality of health care for children enrolled in Medicaid and the Children’s Health Insurance Program (CHIP). Funded by the Children’s Health Insurance Program Reauthorization Act of 2009 (CHIPRA), the Quality Demonstration Grant Program aims to identify effective, replicable strategies for enhancing quality of health care for children. With funding from CMS, the Agency for Healthcare Research and Quality (AHRQ) is leading the national evaluation of these demonstrations. The 18 demonstration States are implementing 52 projects in five general categories:

|

This Evaluation Highlight is the 13th in a series that presents descriptive and analytic findings from the national evaluation of the Children’s Health Insurance Program Reauthorization Act of 2009 (CHIPRA) Quality Demonstration Grant Program. This Highlight focuses on lessons learned from nine States—Alaska, Florida, Idaho, Maine, Massachusetts, North Carolina, Oregon, Utah, and West Virginia. These States implemented learning collaboratives and subsequently reported quantifiable improvements in medical home capacity and/or health care quality among the 137 child-serving primary care practices that participated in the CHIPRA quality demonstration. The analysis is based on work completed by States during the first 4.5 years of their 5-year demonstration projects.

Key Messages

- States used different incentives to encourage practice participation in learning collaboratives. The incentives included stipends, maintenance-of-certification (MOC) credits, and the alignment of learning collaborative content with external financial incentives.

- Practices appreciated a combination of traditional didactic instruction and interactive learning activities such as competitions and live demonstrations. Participants particularly appreciated learning from their peers about how they implemented new care processes, the barriers they faced in integrating new processes, and how they overcame such barriers.

- Practices valued learning about the evidence base supporting a change as well as practical information, such as sample patient screening questionnaires and information on which Medicaid billing code to use for a particular service.

- To support practices’ medical home transformation and/or delivery of new preventive services, States supplemented expert-led meetings and Webinars with individualized practice facilitation and comparative, practice-level quality measure data. States and practices found that practice facilitators needed to undergo quality improvement (QI) training and work with a small enough number of practices to allow them to provide meaningful individualized support.

- At the conclusion of learning collaborative activities, practices in all nine States featured in this Highlight reported an increase in medical home capabilities and/or performance on clinical quality measures.

Background

The CHIPRA statute and other major reforms, including the Affordable Care Act (ACA), have increased the Nation’s focus on the need to improve health care quality. Learning collaboratives (sometimes called QI collaboratives) represent one approach available to help practices improve health care quality.1 This group-learning approach is typically characterized by (1) a focus on a specific topic (often one in which current practice lags behind the available evidence base); (2) the delivery of content by clinical and QI experts; (3) multidisciplinary teams sharing knowledge and learning across several participating organizations; (4) structured in-person and virtual learning activities that include meetings, Webinars, and conference calls; and (5) the use of an improvement model based on setting targets, collecting data, and testing changes on a small scale.2

Several disciplines use learning collaboratives, which have become increasingly common in health care settings. Evidence of collaboratives’ ability to help practices improve health outcomes has been mixed,3, 4 though some evidence points to positive impacts on certain clinical quality measures for pediatric populations.5-7

This Highlight adds to the evidence base by describing the experiences of nine CHIPRA quality demonstration States that are using learning collaboratives to improve medical home capacity and/or performance on clinical quality measures.8 (Details on each State’s collaborative are available in the Supplement to this Highlight.) Through interviews with collaborative staff and participating practices (including federally qualified health centers and pediatric and family practices), we have identified strategies that worked well and those that were less successful. We also report on quantitative improvements realized by practices by mid-2014 based on self-reported medical home practice surveys or clinical quality measures.

The information in this Highlight is based on in-person and telephone interviews conducted by the national evaluation team in spring and summer 2014 with State demonstration staff, participating practices in each of the nine States, and other key stakeholders. The evaluation team’s findings also reflect information from the States’ semi-annual progress reports and other documents provided by the States to illustrate quantifiable improvements in medical home capabilities and/or quality metrics.

Findings

State and practice staff reported that a combination of strategies described below helped the practices increase their medical home capacity and performance on clinical quality measures.

Learning collaboratives taught how to test improvements and measure progress

Learning collaboratives in the nine States convened a subset of child-serving primary care practices (ranging from 3 federally qualified health centers in Alaska to 34 practices that participated in one round of Maine’s learning collaborative) to learn with and from each other in expert-led meetings. Learning collaboratives covered several topics, from how to strengthen practices’ QI efforts to how to expand their medical home capacity and/or improve the delivery rates of particular preventive services. Many practices learned how to conduct small tests of change, often using the Plan-Do-Study-Act (PDSA) approach.9 The PDSA approach involves assessing whether a small change has a positive impact and then continuing to refine and test the change until it is successfully incorporated into a practice.

Learning collaboratives also helped practices gain skills in how to collect data and calculate quality measures for small samples of patients. Learning collaborative staff then provided practices with comparative data showing how they ranked relative to their peers and how their performance had changed over time. The first Highlight in this series describes in greater detail how four States collected and used practice-level quality measurement data.10
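As a rough illustration of the comparative data described above, the following minimal Python sketch computes a quality measure rate from a small chart sample for each practice and ranks the practices against their peers. The practice names, sample sizes, and counts are made up for illustration, and the helper names (`PracticeSample`, `rate`, `rank_practices`) are hypothetical; the sketch does not reproduce any State’s actual reporting method.

```python
from dataclasses import dataclass


@dataclass
class PracticeSample:
    name: str       # hypothetical practice label
    eligible: int   # sampled patients eligible for the service
    received: int   # of those, patients who received the service


def rate(sample: PracticeSample) -> float:
    """Percentage of eligible sampled patients who received the service."""
    if sample.eligible == 0:
        raise ValueError(f"{sample.name}: no eligible patients in sample")
    return 100.0 * sample.received / sample.eligible


def rank_practices(samples):
    """Return (rank, name, rate) tuples, best rate first; tied rates share a rank."""
    scored = sorted(((rate(s), s.name) for s in samples),
                    key=lambda t: (-t[0], t[1]))
    ranked = []
    for i, (pct, name) in enumerate(scored):
        if i > 0 and pct == scored[i - 1][0]:
            rank = ranked[-1][0]   # same rate as the previous practice
        else:
            rank = i + 1
        ranked.append((rank, name, round(pct, 1)))
    return ranked


# Made-up sample data, for illustration only.
samples = [
    PracticeSample("Practice A", eligible=30, received=21),
    PracticeSample("Practice B", eligible=25, received=20),
    PracticeSample("Practice C", eligible=40, received=28),
]

report = rank_practices(samples)
for rank, name, pct in report:
    print(f"{rank}. {name}: {pct}%")
```

Repeating the same calculation on a later chart sample would show each practice how its rate changed over time. With samples this small, differences between practices should be read cautiously.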

States used incentives to encourage participation in collaboratives

Stipends. Four States (Alaska, Massachusetts, Oregon, and North Carolina) reported using financial stipends to help defray the cost of lost revenue incurred when practice staff attended collaborative meetings instead of seeing patients. Stipends ranged from as much as $18,000 per year in one State to a few thousand dollars in others. Even when stipends did not fully cover the cost of lost productivity, practices valued the States’ contributions and support for practices’ investment in quality improvement.

Continuing education credits. Many CHIPRA quality demonstration States partnered with State chapters of professional societies, such as the American Academy of Pediatrics (AAP), to provide free or discounted continuing medical education (CME) credits or maintenance of certification (MOC) credits. Physicians need CME and MOC credits to maintain their licensure and board certification, respectively. MOC credits are particularly valuable because fulfilling them requires participating in educational activities, performing QI activities, and submitting quality measure data.

|

“[Offering maintenance of certification] has been critical. It’s a nice carrot to offer providers, and we’ve had a ton of interest and provided a lot of MOC credit. It [gives] providers that incentive to stay involved.” —Idaho Demonstration Staff, July 2014 |

Aligning clinical topics with external reimbursement. Some States focused the content of learning collaboratives on clinical topics that aligned with financial incentives available to practices from public or private payers or health care systems. These external incentives included enhanced payments for adopting the patient-centered medical home (PCMH) model of care (Oregon and Vermont) and bonuses for achieving performance targets on certain quality measures that some health care systems and payers use for financial incentives (Maine).

Medicaid billing codes. As part of their learning collaboratives, some States sought to increase the delivery of certain preventive services, including screening for conditions such as developmental delays or adolescent depression. However, many providers’ perception that they could not get reimbursed for these screenings was a major barrier. States found that identifying billing codes providers could use persuaded some to deliver preventive services. For example, leaders of North Carolina’s learning collaborative developed a simple, one-page document that described the autism screening questionnaire to be used, the Medicaid billing code for that service, and strategies for overcoming common barriers to using the screening tool. The State developed similar “one-pagers” for a variety of services, and practices appreciated having access to this practical information.11

In Maine, when no billing code was available to reimburse practices for providing oral health risk assessments for children from birth to 3 years, State demonstration staff first worked with the State’s Medicaid agency to create a new billing code. The State demonstration staff then communicated the code to practices participating in the State’s learning collaborative and later publicized the code Statewide in an effort to improve oral health screening rates.

Learning collaboratives used various strategies to keep practices engaged

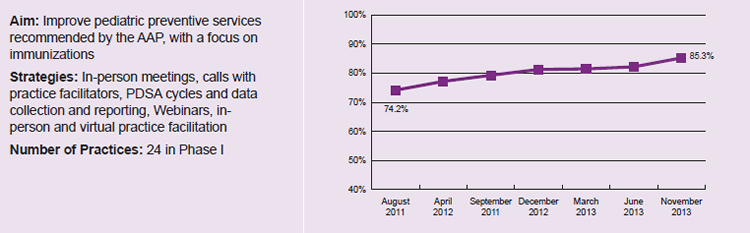

Combining didactic and interactive instruction. Learning collaborative leaders and participants in all nine States reported that a combination of didactic and interactive instruction was ideal. Several States supplemented lectures with role-playing and competitive team games. For example, in Maine, one learning collaborative session featured a game show–like competition in which practices teamed up against each other to answer questions about immunization guidelines. The practices found the game more engaging, and the content more accessible, than a traditional lecture. This strategy was one of several used by Maine that ultimately led to an increase of 11.1 percentage points in the proportion of patients receiving recommended immunizations (Figure 1).

Figure 1. Increased immunization rates reported by Maine for learning collaborative practices12

Note: Data reported by CHIPRA quality demonstration staff in Maine and not independently validated by the national evaluation team. Increase in reported immunization rates is statistically significant (p<0.05).

Peer networking. Many States found that opportunities for peer networking and informal discussions about different approaches to delivering care were a major draw for learning collaborative meetings. When several States (including Alaska, Massachusetts, and West Virginia) learned that practices appreciated interacting with and learning from other practices, they reduced the amount of traditional didactic instruction to allow more time for peer-to-peer learning. The learning collaboratives provided practices with time to network with each other, including during lunch and between sessions at in-person or virtual meetings. In addition, practices in some States shared knowledge with each other by making presentations on how they adopted new care processes.

| “I think [practices learning together] is a terrific concept…. When a group of offices get together, or a group of doctors and nurses and support staff get together, and start thinking of ways to practice better, [it] is a terrific synergy.”

—Florida Practice Staff, May 2014 |

Tailoring collaboratives to practices’ needs. To make sure that collaboratives were meeting practices’ needs over the course of the demonstration, many States solicited frequent feedback from the practices and made mid-course adjustments to collaboratives’ structure and content. For example, learning collaborative leaders in West Virginia realized that practice facilitation had to take place at practices’ offices to reach the entire team. On-site meetings with the teams replaced virtual practice calls to deliver content, solicit feedback, and assist with data reporting issues. By collecting feedback, States identified instructional approaches that worked well for participants or adjusted other aspects of their collaboratives.

Enlisting physician leaders as faculty. State staff in several States emphasized the importance of relying on a reputable physician to lead their learning collaboratives. State and practice staff perceived that physicians with State leadership credentials (such as leaders of State AAP chapters) were particularly successful in leading learning collaboratives. Practice staff especially trusted physicians with substantial clinical and QI experience.

| “Having a physician lead [the learning collaborative] was key. [It was important to] have a credible leader who is a pediatrician from that community who was also part of the Maine American Academy of Pediatrics (AAP) chapter, and to get buy-in from the Maine American Academy of Family Physicians (AAFP).”

—Maine Demonstration Staff, July 2014 |

Demonstrations. States sometimes supplemented instruction with live or prerecorded demonstrations of how to deliver services that participating practices were not accustomed to delivering routinely. For example, a pediatric dentist in Maine instructed learning collaborative meeting participants on how to apply fluoride varnish and then, while still on stage, supervised as a volunteer doctor from the audience applied varnish to a toddler’s teeth. In North Carolina, videos of how to apply dental varnish were shown to staff during practice visits. An in-person session in Utah featured an interactive theater exercise: participants suggested different ways to incorporate mental health screening questions into the adolescent physicals required for student participation on sports teams, and then actors acted out the different approaches.

Practices benefitted from individualized facilitation

All nine States used practice facilitators in some capacity. Some States did not use practice facilitators at the beginning of the CHIPRA quality demonstration but then involved them later in the demonstration in response to an identified need. For example, Alaska hired practice facilitators in January 2014 to leverage an emerging opportunity in the State to pair local medical home experts with practices to assist with their transformation.

Facilitators in all nine States visited practices, were available by telephone, and provided practices with customized facilitation and technical assistance. They also kept practices “on task” and provided customized feedback about practice-level QI efforts. State and practice staff highlighted the importance of “a good fit” between facilitators and practice staff. In one State, for example, several practices reported that they faced challenges in developing the same rapport with a new facilitator after the previous facilitator retired.

Facilitators needed strong QI skills and had to be able to spend sufficient time with each practice. For example, in one State, practice staff reported that facilitators who worked with numerous practices were spread too thin and were not sufficiently trained in QI principles. About 3 years into the demonstration, the State hired a QI specialist to train and provide assistance to the practice facilitators. As a result, the facilitators developed a better understanding of QI processes such as PDSA cycles, how to prepare and interpret charts, and how to engage providers in quality measurement.

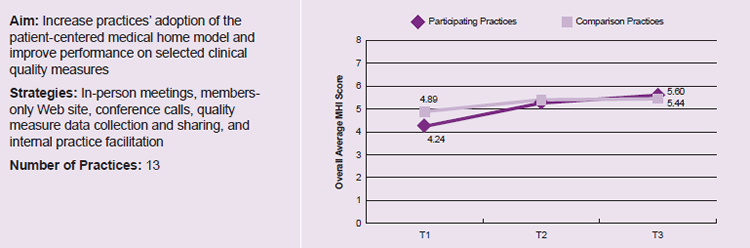

While most States hired facilitators from outside the practice, Massachusetts provided training to one person within each practice in order to build his or her skills in assisting with practice improvement. Training in-house facilitators was one of several strategies the State used to help practices adopt the PCMH model of care. In Figure 2, we show that, although the practices in Massachusetts’s learning collaborative had less medical home capacity than comparison practices when the collaborative began (4.24 on a scale of 0 to 8, compared with 4.89 among comparison practices), they adopted features of a medical home faster than did comparison practices. By the end of the collaborative, the participating practices had surpassed the comparison practices in their Medical Home Index score (5.60 compared with 5.44).

Figure 2. Increased Medical Home Index scores reported by Massachusetts for learning collaborative and comparison practices13

Note: Data reported by CHIPRA quality demonstration staff in Massachusetts; not independently validated.

Time 1 (T1) is October 2011 (before the start of the learning collaborative) for the participating practices and March 2012 for the comparison practices; Time 2 (T2) is November 2012 for the participating practices and January 2013 for the comparison practices; Time 3 (T3) is November 2013 for both the participating and comparison practices.
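The arithmetic behind the comparison in Figure 2 can be sketched in a few lines of Python. The four scores are the ones quoted in the text above; the variable names are illustrative. This is a descriptive calculation only: endnote 13 indicates that the two groups were scored with different versions of the Medical Home Index, and their T1 and T2 dates differ, so the results should be read with caution.

```python
# Medical Home Index scores quoted in the text (T1 = first measurement, T3 = last).
scores = {
    "participating": {"T1": 4.24, "T3": 5.60},
    "comparison": {"T1": 4.89, "T3": 5.44},
}

# Change in score from T1 to T3 for each group.
gains = {group: round(s["T3"] - s["T1"], 2) for group, s in scores.items()}

# Gap between groups (participating minus comparison) at the start and the end.
gap_start = round(scores["participating"]["T1"] - scores["comparison"]["T1"], 2)
gap_end = round(scores["participating"]["T3"] - scores["comparison"]["T3"], 2)

print(f"Gain, participating practices: {gains['participating']}")
print(f"Gain, comparison practices:    {gains['comparison']}")
print(f"Gap at T1: {gap_start}; gap at T3: {gap_end}")
```

Under these figures, participating practices gained 1.36 points and comparison practices gained 0.55 points, turning a starting gap of -0.65 into an ending gap of +0.16.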

Collaboratives helped forge new referral relationships

Many practices across the States reported that they were wary of screening for mental health conditions because they felt unequipped to deliver the needed care themselves and were not aware of nearby specialists. To rectify the situation, the organization leading the learning collaborative in Utah asked local mental health professionals to deliver “elevator speeches” about their services during “speed resourcing” sessions at in-person collaborative meetings. It also arranged for local psychiatrists to engage with practices in the learning collaborative to consult on specific patient cases. The relationship with psychiatrists gave some practices the confidence to screen for mental health conditions, engage in some basic conversations with patients about mental health, and refer appropriate cases to local resources. State staff reported that practices in Utah’s learning collaborative have dramatically increased the rate at which they deliver routine screening for mental health conditions (from 33.6 percent of eligible visits in August 2012 to 90.3 percent in July 2013), in part because of the relationships they forged with mental health care providers.14

Similarly, Maine’s learning collaborative developed new referral relationships between participating primary care providers and local pediatric dentists through its Dining with the Dentist program. Over a meal, primary care providers and a local dentist got to know each other and formed informal service agreements or compacts; primary care providers identified what type of information dentists want when patients are referred to them, and the dentists learned what type of reports primary care physicians want to receive back. This networking is also allowing dentists to communicate their interest in seeing pediatric patients earlier than physicians typically refer patients to them, before dental problems develop.

Enlisting family caregivers to provide practices with feedback was valuable but challenging

Family perspectives. Most States found it beneficial to include family caregivers in practices’ QI initiatives, such as through membership on advisory councils and participation in learning collaborative sessions. A caregiver’s perspective could help a practice become aware of opportunities for quality improvement, though States experienced challenges in securing ongoing participation from busy parents. Across States, practice staff reported that soliciting parent or guardian involvement worked better in person than through email, listservs, or conference calls.

| “Our overall experience with family partners is that sometimes it works really well—because the practices get it and they have a great family partner who is really communicative—and other times, it is really a struggle . . . . [It’s been important to] consult with individual practices and see what they need help with.”

—Utah Demonstration Staff, June 2014 |

Several States created formal mechanisms for including family caregivers in learning collaborative meetings. For example, in Massachusetts, some learning collaborative sessions focused on strategies for engaging family caregivers in a practice’s QI committee or other quality-related activities, and featured caregivers as speakers. Both a State staff person and a provider described the in-person interaction among learning collaborative staff, practices, and family caregivers as an especially successful element of Massachusetts’s learning collaborative. Practices have also begun to see the value of involving family caregivers in refining clinic processes. For example, a practice in Idaho made changes to its intake packets and appointment reminder letters in response to caregiver comments on the type of information they wanted to receive about followup appointments.

Although several States spoke very positively of the value of involving family caregivers in learning collaboratives or on practices’ QI teams, staff in some States expressed concern that caregivers’ presence might discourage providers from being completely transparent about the various challenges they face in quality improvement.

States reported positive outcomes from their learning collaboratives

At the conclusion of their learning collaboratives, the nine States featured in this Highlight reported quantifiable gains in at least one outcome. The gains typically took the form of an increase in (1) the practices’ scores on self-reported surveys that measure the extent to which a practice has adopted the medical home model of care (true for the three partner States in Figure 3, which saw significant increases in participating practices’ National Committee for Quality Assurance [NCQA] PCMH scores) or (2) the delivery rate of preventive services, such as screening for mental health conditions or administering age-appropriate immunizations. Results such as those presented in Figures 1, 2, and 3 suggest that learning collaboratives can support practice improvement that leads to better outcomes.

Figure 3. Increased NCQA Patient-Centered Medical Home scores reported by three partner States (Alaska, Oregon, and West Virginia) for learning collaborative practices15

Note: Data reported by CHIPRA quality demonstration staff in Oregon, Alaska, and West Virginia and not independently validated by the national evaluation team. Increases in NCQA medical home scores are statistically significant (p<0.05).

Conclusions

The States included in this Highlight reported measurable improvements in medical home capabilities and/or quality measure performance for practices participating in their CHIPRA learning collaboratives. States faced a learning curve implementing the collaboratives and often refined the strategies they used in response to formal and informal feedback collected from participating practices. Even though the nine CHIPRA quality demonstration States structured their learning collaboratives differently, the collaboratives shared important characteristics and experiences that point to several promising practices.

State and practice staff found that learning collaboratives can be highly successful in encouraging practice participation by offering stipends and MOC credits and by aligning with external financial incentives available in their respective States. Learning collaborative leaders also found that they could increase practice engagement by using interactive learning approaches in addition to traditional instruction and by providing ample opportunities for peer-to-peer learning. Practices placed a particularly high value on learning collaboratives led by respected physicians and found practical information (such as one-page documents with billing codes) especially helpful. Practices discovered that family partners brought insightful and helpful perspectives but also encountered challenges in maintaining their participation. Practice facilitation is also useful for keeping practices “on task” and providing customized feedback. States learned that practice facilitation is most successful when facilitators undergo training in QI methods, demonstrate relevant clinical knowledge, and work with a limited number of practices to allow for the development of personal relationships between facilitators and practices.

Implications

States interested in using learning collaboratives to help practices improve their medical home capacity and/or their performance on clinical quality measures may want to consider the following lessons learned by the nine CHIPRA quality demonstration States featured in this Highlight:

- Align learning collaborative content with external reimbursement opportunities (such as pay-for-performance initiatives) and offer MOC credits or stipends to encourage practice participation.

- Help practices learn to incorporate new care processes using small tests of change that are iteratively assessed and modified (such as by using the PDSA approach).

- Offer comparative quality measure data that show how practices compare to their learning collaborative peers to motivate QI efforts.

- Hire and train practice facilitators to provide individualized support to practices.

- Work with local physician leaders to develop and deliver learning collaborative sessions.

- Combine formal didactic learning with interactive and informal approaches to keep practices engaged and help them build connections with other practices.

- Provide the rationale for delivering a particular service as well as practical information, such as recommended patient screening questionnaires, Medicaid billing codes, and live or prerecorded demonstrations of how to deliver a service.

- Solicit regular feedback from practices about the learning collaborative structure and content and make timely mid-course adjustments to meet practices’ needs.

Acknowledgments

The national evaluation of the CHIPRA Quality Demonstration Grant Program and the Evaluation Highlights are supported by a contract (HHSA29020090002191) from the Agency for Healthcare Research and Quality (AHRQ) to Mathematica Policy Research and its partners, the Urban Institute and AcademyHealth. Special thanks are due to Cindy Brach and Linda Bergofsky (AHRQ), Elizabeth Hill and Barbara Dailey (CMS), Dana Petersen, Joe Zickafoose, and Daryl Martin (Mathematica), and Ellen Albritton (AcademyHealth), as well as CHIPRA quality demonstration staff in the States featured in this Highlight for their careful review and many helpful comments. We also appreciate the time that CHIPRA quality demonstration staff, practice staff, and other stakeholders devoted to answering our interview questions. The observations in this document represent the views of the authors and do not necessarily reflect the opinions or perspectives of any State or Federal agency. |

Learn more

Additional information about the national evaluation and the CHIPRA quality demonstration is available at http://www.ahrq.gov/policymakers/chipra/demoeval/. Use the tabs and information boxes on the Web page to also:

|

Endnotes

1 Devers K. The State of Quality Improvement Science in Health: What Do We Know about How to Provide Better Care? Princeton, NJ: Robert Wood Johnson Foundation; November 2011.

2 Schouten L, Hulscher M, Everdingen J, et al. Evidence for the impact of quality improvement collaboratives: systematic review. BMJ 2008;336(7659):1491-94.

3 Nadeem E, Olin SS, Hill LC, et al. A literature review of learning collaboratives in mental health care: used but untested. Psychiatr Serv 2014;65(9):1088-99.

4 Nadeem E, Olin S, Hill LC, et al. Understanding the components of quality improvement collaboratives: a systematic literature review. Milbank Q 2013;91(2):354-94.

5 Payne NR, Finkelstein MJ, Liu M, et al. NICU practices and outcomes associated with 9 years of quality improvement collaboratives. Pediatrics 2010;125(3):437-46.

6 Polacsek M, Orr J, Letourneau L, et al. Impact of a primary care intervention on physician practice and patient and family behavior: Keep ME healthy—the Maine Youth Overweight Collaborative. Pediatrics 2009;123(suppl 5):S258-66.

7 Van Cleave J, Kuhlthau K, Bloom S, et al. Interventions to improve screening and follow-up in primary care: a systematic review of the evidence. Acad Pediatr 2012;12(4):269-82.

8 Devers K, Foster L, Brach C. Nine states’ use of collaboratives to improve children’s health care quality in Medicaid and CHIP. Acad Pediatr 2013;13(6S):S95-101.

9 Evaluation Highlight #5 discusses how practices used PDSA cycles to facilitate practice change. Available at: https://www.ahrq.gov/sites/default/files/wysiwyg/policymakers/chipra/demoeval/what-we-learned/highlight05.pdf.

10 Ferry GA, Ireys HT, Foster L, et al. How Are CHIPRA Demonstration States Approaching Practice-Level Quality Measurement and What Are They Learning? Rockville, MD: Agency for Healthcare Research and Quality; 2014. Available at: http://www.ahrq.gov/policymakers/chipra/demoeval/what-we-learned/highlight01.html.

11 A selection of learning collaborative materials, including the “one-pagers” described here, is available on North Carolina’s learning collaborative Web page at: http://www.ncfahp.org/chipra-connect.aspx

12 IHOC First STEPS Phase 1 Initiative: Improving Immunizations for Children and Adolescents—Final Evaluation Report; March 2013. Available at: http://www.maine.gov/dhhs/oms/pdfs_doc/ihoc/first-steps-phase1-eval-report.pdf; Evaluating First STEPS: What have we learned? Harvest Meeting Presentation; Sept. 2014. Available at: http://www.mainequalitycounts.org/image_upload/Harvest%20evaluation%20overview%20091014%20v2.pdf. Figure 1 illustrates the average rate of change for overall immunization rates across 16 of the 24 practices participating in Phase I of Maine’s learning collaborative (8 other practices did not complete pre- and post-surveys). For 2 year olds, this rate includes hepatitis A, hepatitis B, MMR, varicella, polio, DTP, pneumococcal conjugate vaccine, and rotavirus; for 6 year olds, it includes MMR, varicella, polio, and DTP; for 13 year olds, it includes meningococcal vaccine, TD, and HPV (girls only).

13 CHIPRA Quality Demonstration Semi-Annual Progress Report submitted by Massachusetts CHIPRA demonstration staff to CMS, August 2014. Figure 2 illustrates Medical Home Index scores for the 13 CHIPRA practices in Massachusetts compared with Medical Home Index-Revised Short Form scores for comparison practices (8 practices at T1, 9 practices at T2, 10 practices at T3).

14 Unpublished document from Utah Pediatric Partnership to Improve Healthcare Quality (UPIQ). Nae’ole S. “Mental health problems in children, year 2: identification, evaluation and initial management.”

15 Internal report from Tri-State Children’s Health Improvement Consortium (T-CHIC). “Updated Medical Home Office Report Tool (MHORT) Findings for Alaska, Oregon, and West Virginia.”