AcademyHealth State-University Partnership Learning Network (SUPLN) Web Conference

Findings from the CHIPRA Quality Demonstration Grant Program

Slide 1

AcademyHealth State-University Partnership Learning Network (SUPLN) Web Conference

Findings from the CHIPRA Quality Demonstration Grant Program

September 17, 2015

Slide 2

Teleconference Instructions

Please make sure your computer is linked to your phone:

- A box should appear on your screen when you log-in with dial-in instructions.

OR

- Click on the phone symbol in the toolbar at the top of your screen. Enter your phone number and click "join"; the system will call you directly.

OR

- Call in directly:

- Dial 1-866-244-8528

- Enter the access code 602144 and press pound (#)

- Once you have dialed in, click on the information symbol in the top right corner of the meeting room and dial the "Telephone Token" number into your phone.

- The system will link your phone with your computer.

Slide 3

View the Slides in Full Screen

- If you would like to view the slides in full screen, click the four way arrow button on the top right corner of the slides.

Slide 4

Download Slide Deck and Materials

- If you would like to download the slide deck and materials for this presentation, click the "Download File(s)" button in the box marked "Download Slides" in the lower left-hand corner.

Slide 5

Technical Assistance

- If you have technical questions during the event, please type them into the chat box in the lower left-hand corner of the screen.

- Live technical assistance is also available:

- Please call Adobe Connect at (800) 422-3623

Slide 6

Discussion

To raise your hand:

- Participants can use the hand raise button at the top of the screen to signal to the presenter that they would like to speak

To submit a question:

- Click in the chat box on the left side of your screen

- Type your question into the dialog box and click the Send button

Please mute your phones if you are not speaking to reduce background noise.

Slide 7

AcademyHealth Staff

- Enrique Martinez-Vidal, Vice President State Policy and Technical Assistance

- Alyssa Walen, Senior Manager

- Stephanie Kennedy, Research Assistant

Slide 8

Agenda

- Welcome and Introduction

- Core Set of Children's Health Care Quality Measures for Medicaid and CHIP: Lessons from the CHIPRA Quality Demonstration Grant Program

- Anna Christensen, Ph.D., Senior Health Researcher, Mathematica Policy Research

- SC Medicaid-USC Partnership: Implementing CHIPRA Core Measures in South Carolina

- Kathy Mayfield Smith, MA, MBA, Associate Director, Medicaid Policy Research, USC Institute for Families in Society

- Implementing Child Health Measures at the State and Practice-level: Lessons Learned through Maine's Improving Health Outcomes for Children CHIPRA Quality Demonstration Grant

- Kimberley Fox, MPA, Senior Research Associate, Cutler Institute for Health and Social Policy, Muskie School of Public Service, University of Southern Maine

- Elizabeth Hill, Centers for Medicare and Medicaid Services

- Q+A and Discussion

Slide 9

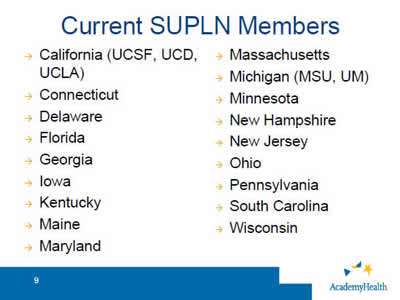

Current SUPLN Members

- California (UCSF, UCD, UCLA)

- Connecticut

- Delaware

- Florida

- Georgia

- Iowa

- Kentucky

- Maine

- Maryland

- Massachusetts

- Michigan (MSU, UM)

- Minnesota

- New Hampshire

- New Jersey

- Ohio

- Pennsylvania

- South Carolina

- Wisconsin

Slide 10

Core Set of Children's Health Care Quality Measures for Medicaid and CHIP: Lessons from the CHIPRA Quality Demonstration Grant Program

Presentation to the State-University Partnership Learning Network

September 17, 2015

Anna L. Christensen, Ph.D., Senior Health Researcher, Mathematica Policy Research

Slide 11

Agenda

- Background on the CHIPRA Quality Demonstration Grants and the CMS Child Core Set

- Evaluation Findings and Lessons Learned from the CHIPRA Quality Demonstration Grant Program

- How are Demonstration States Using the Child Core Set Measures to Improve Quality?

Slide 12

Background on the CHIPRA Quality Demonstration Grants and the CMS Child Core Set

Slide 13

CHIPRA Quality Demonstration Grants

- Congressionally mandated by the Children's Health Insurance Program Reauthorization Act of 2009 (CHIPRA)

- $100 million program

- One of the largest federally funded efforts to focus on health care for children

- Five-year grants awarded by CMS

- 10 grants, including multi-State partnerships (18 States total)

- February 2010–February 2015, with some extensions

- $9 to $11 million per grantee

- National evaluation

- CMS funding, AHRQ oversight

- August 2010–September 2015

- Mathematica, Urban Institute, AcademyHealth

Slide 14

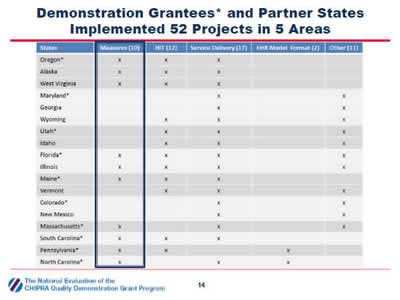

Demonstration Grantees* and Partner States Implemented 52 Projects in 5 Areas

Chart shows the projects implemented by 18 States (the 10 CHIPRA demonstration grantee States and their partners) in rows across five topic areas: measures, health information technology, service delivery, EHR model format, and other.

Slide 15

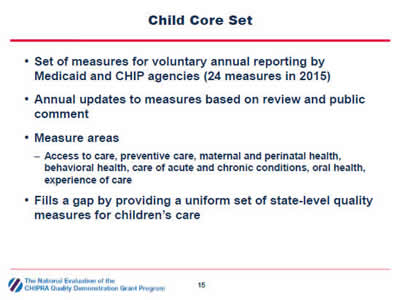

Child Core Set

- Set of measures for voluntary annual reporting by Medicaid and CHIP agencies (24 measures in 2015)

- Annual updates to measures based on review and public comment

- Measure areas

- Access to care, preventive care, maternal and perinatal health, behavioral health, care of acute and chronic conditions, oral health, experience of care

- Fills a gap by providing a uniform set of state-level quality measures for children's care

Slide 16

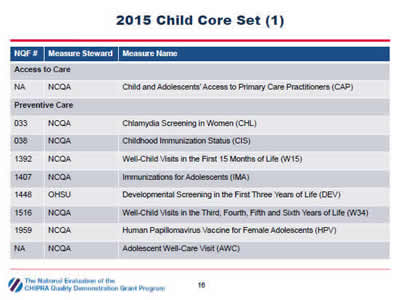

2015 Child Core Set (1)

| NQF # | Measure Steward | Measure Name |
|---|---|---|
| Access to Care | | |
| NA | NCQA | Child and Adolescents' Access to Primary Care Practitioners (CAP) |
| Preventive Care | | |
| 0033 | NCQA | Chlamydia Screening in Women (CHL) |
| 0038 | NCQA | Childhood Immunization Status (CIS) |
| 1392 | NCQA | Well-Child Visits in the First 15 Months of Life (W15) |
| 1407 | NCQA | Immunizations for Adolescents (IMA) |
| 1448 | OHSU | Developmental Screening in the First Three Years of Life (DEV) |
| 1516 | NCQA | Well-Child Visits in the Third, Fourth, Fifth and Sixth Years of Life (W34) |
| 1959 | NCQA | Human Papillomavirus Vaccine for Female Adolescents (HPV) |
| NA | NCQA | Adolescent Well-Care Visit (AWC) |

Table lists the 24 measures in the 2015 Child Core Set, as well as their NQF number (if endorsed) and measure steward. Measures are grouped by category.

Slide 17

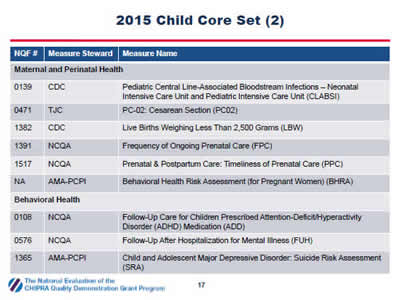

2015 Child Core Set (2)

| NQF # | Measure Steward | Measure Name |
|---|---|---|
| Maternal and Perinatal Health | | |
| 0139 | CDC | Pediatric Central Line-Associated Bloodstream Infections – Neonatal Intensive Care Unit and Pediatric Intensive Care Unit (CLABSI) |
| 0471 | TJC | PC-02: Cesarean Section (PC02) |
| 1382 | CDC | Live Births Weighing Less Than 2,500 Grams (LBW) |
| 1391 | NCQA | Frequency of Ongoing Prenatal Care (FPC) |
| 1517 | NCQA | Prenatal & Postpartum Care: Timeliness of Prenatal Care (PPC) |
| NA | AMA-PCPI | Behavioral Health Risk Assessment (for Pregnant Women) (BHRA) |
| Behavioral Health | | |
| 0108 | NCQA | Follow-Up Care for Children Prescribed Attention-Deficit/Hyperactivity Disorder (ADHD) Medication (ADD) |
| 0576 | NCQA | Follow-Up After Hospitalization for Mental Illness (FUH) |
| 1365 | AMA-PCPI | Child and Adolescent Major Depressive Disorder: Suicide Risk Assessment (SRA) |

Table continued from last page, listing the 24 measures in the 2015 Child Core Set.

Slide 18

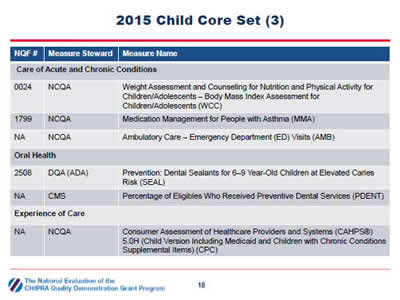

2015 Child Core Set (3)

| NQF # | Measure Steward | Measure Name |
|---|---|---|
| Care of Acute and Chronic Conditions | | |
| 0024 | NCQA | Weight Assessment and Counseling for Nutrition and Physical Activity for Children/Adolescents – Body Mass Index Assessment for Children/Adolescents (WCC) |
| 1799 | NCQA | Medication Management for People with Asthma (MMA) |
| NA | NCQA | Ambulatory Care – Emergency Department (ED) Visits (AMB) |
| Oral Health | | |
| 2508 | DQA (ADA) | Prevention: Dental Sealants for 6–9 Year-Old Children at Elevated Caries Risk (SEAL) |
| NA | CMS | Percentage of Eligibles Who Received Preventive Dental Services (PDENT) |
| Experience of Care | | |
| NA | NCQA | Consumer Assessment of Healthcare Providers and Systems (CAHPS®) 5.0H (Child Version Including Medicaid and Children with Chronic Conditions Supplemental Items) (CPC) |

Table continued from last page, listing the remaining measures among the 24 in the 2015 Child Core Set: care of acute and chronic conditions, oral health, and experience of care. The experience of care measure is the Consumer Assessment of Healthcare Providers and Systems (CAHPS®) 5.0H (Child Version Including Medicaid and Children with Chronic Conditions Supplemental Items).

Slide 19

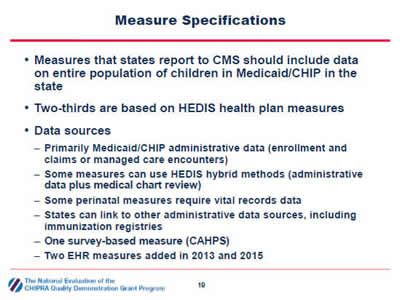

Measure Specifications

- Measures that states report to CMS should include data on entire population of children in Medicaid/CHIP in the state

- Two-thirds are based on HEDIS health plan measures

- Data sources

- Primarily Medicaid/CHIP administrative data (enrollment and claims or managed care encounters)

- Some measures can use HEDIS hybrid methods (administrative data plus medical chart review)

- Some perinatal measures require vital records data

- States can link to other administrative data sources, including immunization registries

- One survey-based measure (CAHPS)

- Two EHR measures added in 2013 and 2015

Slide 20

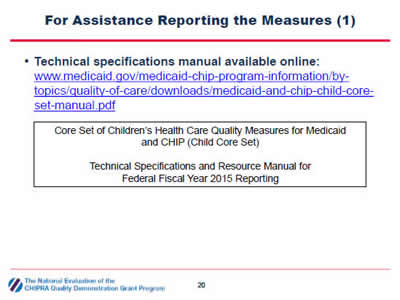

For Assistance Reporting the Measures (1)

- Technical specifications manual available online: http://www.medicaid.gov/medicaid-chip-program-information/by-topics/quality-of-care/downloads/medicaid-and-chip-child-core-set-manual.pdf

Core Set of Children's Health Care Quality Measures for Medicaid and CHIP (Child Core Set)

Technical Specifications and Resource Manual for Federal Fiscal Year 2015 Reporting

Slide 21

For Assistance Reporting the Measures (2)

- Medicaid/CHIP Health Care Quality Measures Technical Assistance (TA) and Analytic Support Program

- Established by CMS in 2011 as a capacity-building program

- TA available to all states via:

- Resource manuals

- Email helpdesk

- Webinars

- Issue briefs

- In-person quality conferences

Slide 22

For Assistance Reporting the Measures (3)

Image: Screen shot of the September 2014 Medicaid/CHIP Fact Sheet.

Slide 23

For Measure Results

- Child Core Set measures are publicly reported annually by HHS: http://www.medicaid.gov/medicaid-chip-program-information/by-topics/quality-of-care/downloads/2014-child-sec-rept.pdf

Image: Cover of the HHS 2014 Annual Report on the Quality of Care for Children in Medicaid and CHIP.

Slide 24

Evaluation Findings and Lessons Learned from the CHIPRA Quality Demonstration Grant Program

Slide 25

Measure-Focused Demonstration States

- 10 states focused on calculating the Child Core Set and on using the measures for quality improvement

- Several states partnered with universities

Slide 26

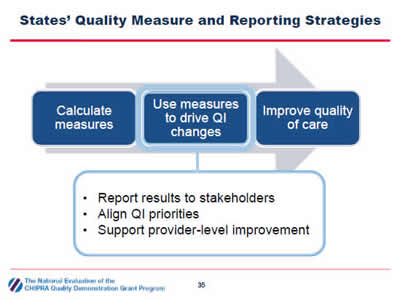

Measure-Focused Demonstration Activities

- Calculate measures.

- Use measures to drive QI changes.

- Improve quality of care.

The figure shows the three types of measure-focused activities that demonstration states participated in. They are to calculate measures, use measures to drive QI changes, and improve quality of care. The box that says "calculate measures" is highlighted to indicate a focus on that activity.

Slide 27

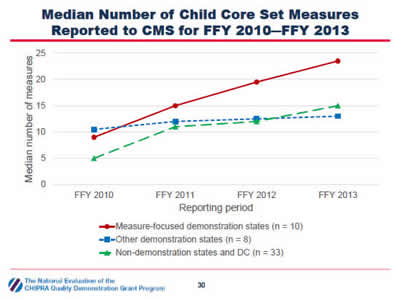

Median Number of Child Core Set Measures Reported to CMS for FFY 2010―FFY 2013

Line graph displays the median number of core child health measures reported on the Y axis. It compares the 10 CHIPRA demonstration States with measure projects, the other 8 demonstration States, and the 33 non-demonstration States and the District of Columbia for Federal fiscal years (FFY) 2010, 2011, 2012, and 2013 displayed on the X axis. Measures-focused States gradually increased the median number of measures reported from 9 in FFY 2010 to 15 in FFY 2011, 19.5 in FFY 2012, and 23.5 in FFY 2013.

Slide 28

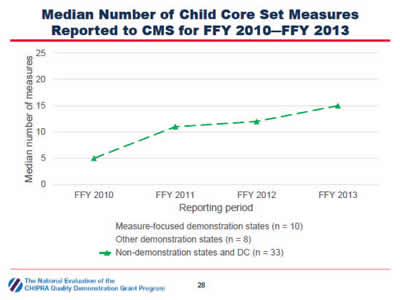

Median Number of Child Core Set Measures Reported to CMS for FFY 2010―FFY 2013


Slide 29

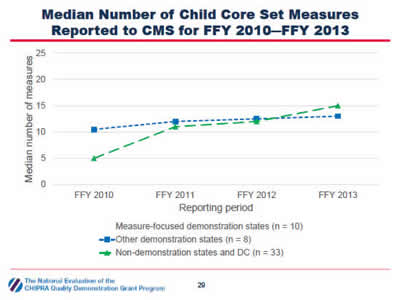

Median Number of Child Core Set Measures Reported to CMS for FFY 2010―FFY 2013


Slide 30

Median Number of Child Core Set Measures Reported to CMS for FFY 2010―FFY 2013


Slide 31

Select Grant-Funded Activities to Expand the Reporting of Measures

- Hiring dedicated measure programmers

- Working across state agencies to link data

- Contracting with Medicaid managed care plans and external quality review organizations (EQROs) to support measure reporting

- Partnering with universities

- Fielding CAHPS survey more systematically

- Developing standard testing procedures to ensure measure accuracy

Slide 32

Challenges

- Combining data from different programs/sources

- Medicaid FFS, Medicaid MCOs, CHIP (if separate CHIP agency)

- Linking state data sources

- e.g., vital records data, state immunization registry

- Reporting measures from EHRs

- Adapting state-level measures to the practice-level for quality improvement activities
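The first challenge above, combining data from different programs, can be illustrated with a minimal sketch. It assumes a rate-based measure, hypothetical counts for three sources, and that each child appears in only one source (no de-duplication is shown). The names `SourceCounts`, `pooled_rate`, and `unweighted_mean_rate` are illustrative and do not come from the presentation or from any CMS specification.

```python
from dataclasses import dataclass


@dataclass
class SourceCounts:
    """Counts for one data source (all values below are hypothetical)."""
    name: str
    numerator: int    # children who met the measure
    denominator: int  # children eligible for the measure


def pooled_rate(sources):
    """Statewide rate: sum numerators and denominators, then divide."""
    num = sum(s.numerator for s in sources)
    den = sum(s.denominator for s in sources)
    if den == 0:
        raise ValueError("no eligible children in any source")
    return round(100 * num / den, 1)


def unweighted_mean_rate(sources):
    """Simple average of per-source rates, ignoring population size."""
    rates = [100 * s.numerator / s.denominator for s in sources]
    return round(sum(rates) / len(rates), 1)


# Hypothetical counts for illustration only.
sources = [
    SourceCounts("Medicaid FFS", 300, 1000),
    SourceCounts("Medicaid MCOs", 6000, 9000),
    SourceCounts("Separate CHIP", 500, 1000),
]

print("Pooled statewide rate:", pooled_rate(sources))
print("Unweighted mean of source rates:", unweighted_mean_rate(sources))
```

In this sketch the two approaches give noticeably different results because the sources differ in size, which is one reason a statewide measure generally needs counts from every program rather than separately reported rates.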

Slide 33

Key Takeaways

- Calculating the measures took more time and resources than states anticipated

- Some measures were more challenging than others

But…

- States can overcome many of the challenges to reporting the Child Core Set measures if they invest in data quality and reporting systems, identify staff or contractors who have expertise in quality measurement, and make use of TA and financial support

Slide 34

How are Demonstration States Using the Child Core Set Measures to Improve Quality?

Slide 35

States' Quality Measure and Reporting Strategies

Calculate measures

↓

Use measures to drive QI changes:

- Report results to stakeholders

- Align QI priorities

- Support provider-level improvement

↓

Improve quality of care

The figure shows the three types of measure-focused activities that demonstration states participated in. They are to calculate measures, use measures to drive QI changes, and improve quality of care. The box that says "use measures to drive QI changes" is highlighted to indicate focus on that activity. A box below it lists three types of activities demonstration states undertook to use measures to drive QI. They include: report results to stakeholders, align QI priorities, and support provider-level improvement.

Slide 36

Reporting Results to Stakeholders

- Goals

- Document and be transparent about performance

- Allow comparisons across states, regions, and health plans

- Identify QI priorities and track improvement over time

- CHIPRA state strategies

- Produce reports from existing data (Medicaid claims, immunization registries)

- Develop reports for different stakeholders: policymakers, health plans, providers, and the public

Slide 37

Aligning Measures and QI Priorities

- Goals

- Foster system-level reflection

- Set the stage for collective action

- Create a powerful incentive for providers to improve care

- CHIPRA state strategies

- Formed multi-stakeholder quality improvement workgroups

- Encouraged consistent quality reporting standards across programs

- Required managed care organizations to meet quality benchmarks

Slide 38

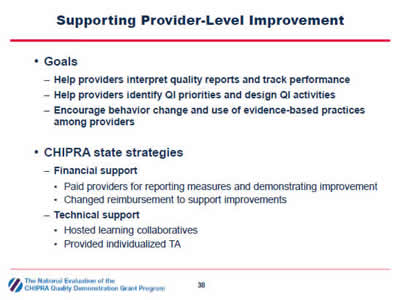

Supporting Provider-Level Improvement

- Goals

- Help providers interpret quality reports and track performance

- Help providers identify QI priorities and design QI activities

- Encourage behavior change and use of evidence-based practices among providers

- CHIPRA state strategies

- Financial support

- Paid providers for reporting measures and demonstrating improvement

- Changed reimbursement to support improvements

- Technical support

- Hosted learning collaboratives

- Provided individualized TA


Slide 39

For More Evaluation Results

- View evaluation highlights and other materials on the evaluation webpage: http://www.ahrq.gov/policymakers/chipra/demoeval/index.html

Slide 40

SC Medicaid-USC Partnership: Implementing CHIPRA Core Measures in South Carolina

Presented by Kathy Mayfield Smith, MA, MBA

Associate Director, Medicaid Policy Research

USC Institute for Families in Society

September 17, 2015

Slide 41

State–University Partnership Continuous since 1996

Images: Logos of the University of South Carolina and the Health Connections Medicaid program.

Slide 42

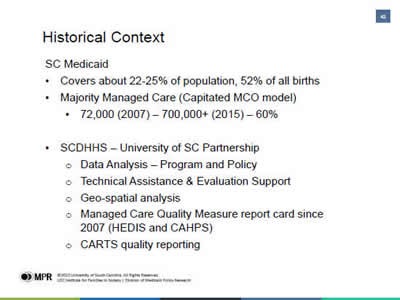

Historical Context

SC Medicaid

- Covers about 22-25% of population, 52% of all births

- Majority Managed Care (Capitated MCO model)

- 72,000 (2007) – 700,000+ (2015) – 60%

- SCDHHS – University of SC Partnership

- Data Analysis – Program and Policy

- Technical Assistance & Evaluation Support

- Geo-spatial analysis

- Managed Care Quality Measure report card since 2007 (HEDIS and CAHPS)

- CARTS quality reporting

Slide 43

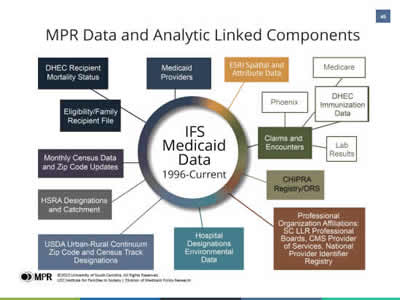

MPR data and Analytic Linked Components

The figure shows the data processed by the Institute for Families in Society's Division of Medicaid Policy Research (MPR). In the center of the figure is IFS Medicaid Data, 1996-current. Various data components come out of the center, like spokes on a wheel.

Slide 44

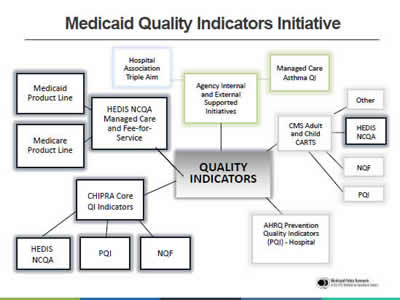

Medicaid Quality Indicators Initiative

Image: This figure shows the quality measures that are included in the Medicaid Quality Indicators Initiative. They include: CHIPRA Core QI Indicators (HEDIS NCQA, PQI, and NQF), HEDIS NCQA Managed Care and Fee for Service measures (both Medicaid and Medicare product lines), Agency Internal and External Supported Initiatives (including Hospital Associated Triple Aim, and Managed Care Asthma QI), CMS Adult and Child CARTS measures (HEDIS NCQA, PQI, NQF, and other), and AHRQ Prevention Quality Indicators (PQI), hospital level.

Slide 45

Leveraged Partnership USC/IFS Role in Demonstration Grant

- Collaboration to conceptualize and write the grant

- Technical assistance and evaluation for state

- Collect and report on all CHIPRA Core measures (including CAHPS)

- Compare practices to matched comparison practices, total CHIPRA, Total MCO and Total State

- Support and participate in Learning Collaborative

- Technical assistance with practices

Slide 46

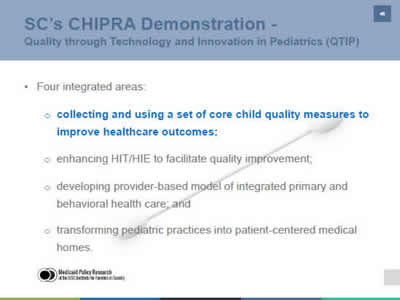

SC's CHIPRA Demonstration - Quality through Technology and Innovation in Pediatrics (QTIP)

- Four integrated areas:

- Collecting and using a set of core child quality measures to improve healthcare outcomes;

- Enhancing HIT/HIE to facilitate quality improvement;

- Developing provider-based model of integrated primary and behavioral health care; and

- Transforming pediatric practices into patient-centered medical homes.

Slide 47

SC's CHIPRA Demonstration - Quality through Technology and Innovation in Pediatrics (QTIP)

- 18 Child serving practices (4 years)

- Types: Private, FQHC/RHC, Academic

- Large/Med/Small - # clinicians – patient population

- 5% of pediatric practices serve over 20% of all children in Medicaid

- Rural/Urban (7% of all Urban, 2% of Suburban, 3% of all Rural)

- 15 comparison practices were matched on all characteristics

- No academic comparison practices

Slide 48

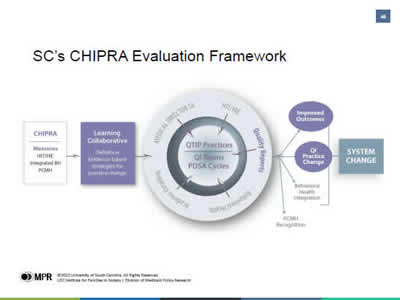

SC's CHIPRA Evaluation Framework

Image: This figure shows South Carolina's CHIPRA evaluation framework leading from CHIPRA measures to quality improvement and practice change and ultimately to system change.

Slide 49

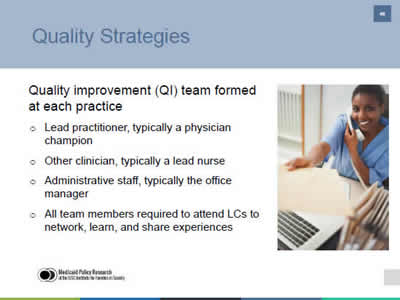

Quality Strategies

Quality improvement (QI) team formed at each practice

- Lead practitioner, typically a physician champion

- Other clinician, typically a lead nurse

- Administrative staff, typically the office manager

- All team members required to attend LCs to network, learn, and share experiences

Slide 50

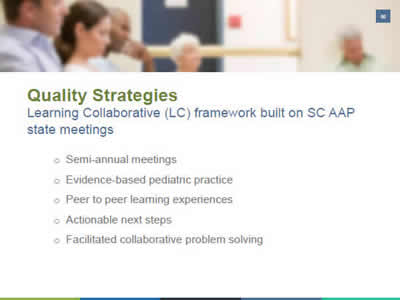

Quality Strategies

Learning Collaborative (LC) framework built on SC AAP state meetings

- Semi-annual meetings

- Evidence-based pediatric practice

- Peer to peer learning experiences

- Actionable next steps

- Facilitated collaborative problem solving

Slide 51

Core Measure Quality Strategies

All 24 Child Core measures

- 2-5 measures introduced by subject matter experts

- Targeted work on at least one new measure

Plan, Do, Study, Act Cycles

On-site TA after LC to reinforce learning and QI skill building

Quality Improvement Reports - practice level

- Administrative claims and encounter data

- Compared to comparison practice, total QTIP, Child State Medicaid

Slide 52

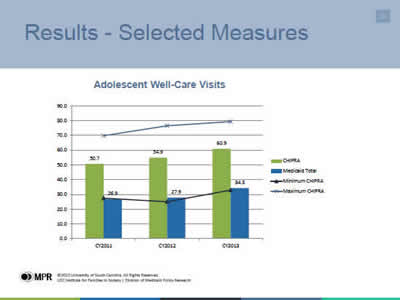

Results - Selected Measures

Adolescent Well-Care Visits

Image: This figure is a bar graph showing outcomes for the adolescent well care visit measure for CY2011, CY2012, and CY2013, for the CHIPRA practices compared to Medicaid as a whole. CHIPRA practices had higher rates of adolescent well care visits than Medicaid overall in each year. CHIPRA practices improved on this measure over time, going from 50.7 percent in 2011, to 54.9 percent in 2012, to 60.9 percent in 2013. Measure performance also improved over time in Medicaid as a whole, going from 26.9 percent in 2011, to 27.9 percent in 2012, to 34.3 percent in 2013.

Slide 53

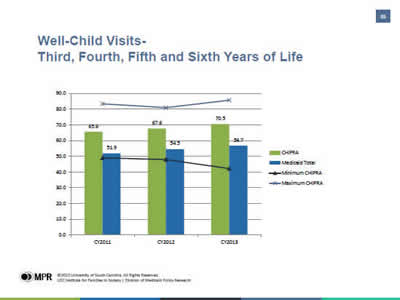

Well-Child Visits- Third, Fourth, Fifth and Sixth Years of Life

Image: This figure is a bar graph showing outcomes for the measure of Well-Child Visits- Third, Fourth, Fifth and Sixth Years of Life, for CY2011, CY2012, and CY2013, for the CHIPRA practices compared to Medicaid as a whole. CHIPRA practices had higher performance on this measure than Medicaid overall in each year. CHIPRA practices improved gradually on this measure over time, going from 65.6 percent in 2011, to 67.6 percent in 2012, to 70.5 percent in 2013. Measure performance also improved gradually over time in Medicaid as a whole, going from 51.9 percent in 2011, to 54.5 percent in 2012, to 56.7 percent in 2013.

Slide 54

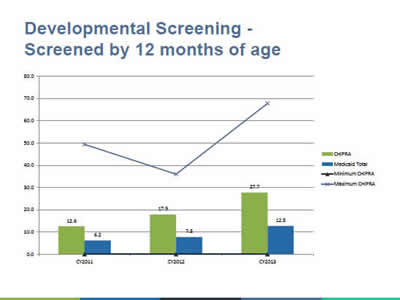

Developmental Screening - Screened by 12 months of age

Image: This figure is a bar graph showing outcomes for the measure of Developmental Screening - Screened by 12 months of age, for CY2011, CY2012, and CY2013, for the CHIPRA practices compared to Medicaid as a whole. CHIPRA practices had higher performance on this measure than Medicaid overall in each year. CHIPRA practices improved on this measure over time, going from 12.6 percent in 2011, to 17.9 percent in 2012, to 27.7 percent in 2013. Measure performance also improved gradually over time in Medicaid as a whole, going from 6.2 percent in 2011, to 7.8 percent in 2012, to 12.8 percent in 2013.
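The three results slides above can be compared side by side with a short calculation. This is a minimal sketch that uses only the rates quoted in the image descriptions; the variable and function names are illustrative, and the output is a descriptive difference in percentage points, not a test of statistical significance.

```python
# Rates (percent) quoted on the three results slides for CY2011, CY2012, CY2013.
RATES = {
    "Adolescent Well-Care Visits": {
        "CHIPRA practices": [50.7, 54.9, 60.9],
        "Medicaid overall": [26.9, 27.9, 34.3],
    },
    "Well-Child Visits, Years 3-6": {
        "CHIPRA practices": [65.6, 67.6, 70.5],
        "Medicaid overall": [51.9, 54.5, 56.7],
    },
    "Developmental Screening by 12 Months": {
        "CHIPRA practices": [12.6, 17.9, 27.7],
        "Medicaid overall": [6.2, 7.8, 12.8],
    },
}


def change_first_to_last(series):
    """Percentage point change from the first year to the last year."""
    return round(series[-1] - series[0], 1)


def summarize(rates):
    """Per-measure change for each group, plus the gap in the final year."""
    summary = {}
    for measure, groups in rates.items():
        chipra = groups["CHIPRA practices"]
        medicaid = groups["Medicaid overall"]
        summary[measure] = {
            "chipra_change": change_first_to_last(chipra),
            "medicaid_change": change_first_to_last(medicaid),
            "gap_2013": round(chipra[-1] - medicaid[-1], 1),
        }
    return summary


SUMMARY = summarize(RATES)
for measure, row in SUMMARY.items():
    print(measure, row)
```

Reading the changes in percentage points keeps the comparison on the same scale as the bar graphs; a relative (percent) change would look much larger for developmental screening because its 2011 baseline is low.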

Slide 55

Lessons Learned

Practice performance drives state performance

TA and QI tools needed for data-driven quality improvement

Continued QI effort critical to sustained high performance

QI Team critical to practice change and improved performance

- Workflow changes, staff empowerment

- Communication of changes to ensure follow-through

Barriers between physician/practice coding and MCO/State impact performance measures

- Practice workflow (staff strip quality codes traditionally not paid)

- Hospital coding practices (no more than 4)

System changes required at state and practice levels

Slide 56

Successes/Outcomes

Improved performance on quality measures

- Intervention practices showed statistically significant improvement over time on 11 measures (e.g., Dental visits, Developmental screening, all Well Care)

- Intervention practices showed statistically significant improvement over comparison on 4 measures (e.g., Weight assessment, Chlamydia screening, developmental screenings)

Demonstrated practice performance drives state performance on quality measures

Slide 57

Successes/Outcomes

Infusion of lessons learned into SCDHHS initiatives and policy changes

- Billing and coding changes to support quality measurement

- MCO incentives/withholds encourage TA with practices

- TA quality initiatives and contracts target quality at practice level

- State Children's quality unit to continue work of QTIP

Slide 58

Get in Touch

- Online

ifs.sc.edu/MPR and schealthviz.sc.edu

- Phone

(803) 777-0930

- Email

klmayfie@mpr.sc.edu

Slide 59

Implementing Child Health Measures at the State and Practice-level

Lessons Learned through Maine's Improving Health Outcomes for Children CHIPRA Quality Demonstration Grant

Sept 17, 2015

State University Partnership Network Webinar

Kimberley Fox

Cutler Institute for Health and Social Policy

Muskie School of Public Service, University of Southern Maine

Funding for this work is provided under grant CFDA 93.767 from the U.S. Department of Health and Human Services, Centers for Medicare and Medicaid Services (CMS), authorized by Section 401(d) of the Children's Health Insurance Program Reauthorization Act (CHIPRA).

Slide 60

CHIPRA Quality Demonstration grant role of State University Partnership

- Builds off longstanding cooperative agreement between Maine DHHS and University of Southern Maine, Muskie School of Public Service

- Technical assistance and data analytic support using longitudinal data warehouse

- Policy analyses, program development, grant writing support

- Program evaluation and monitoring for Maine's Medicaid program.

- A unique grant requirement rewarding multi-state initiatives allowed us to also partner with the State of Vermont and the University of Vermont.

Slide 61

CHIPRA Quality Demonstration grant Role of State University Partnership (cont)

- Pre-award

- Data warehouse and measurement experience used to demonstrate expertise; grant application prepared in partnership with Vermont.

- Post-award

- Cross-state grant and program administration in Maine, TA and data analytic support for child health measurement implementation at statewide and practice-level, rapid cycle evaluation.

- Value of cross-state/university partnership

Slide 62

Maine's Improving Health Outcomes for Children (IHOC) Initiative

Collaborate with health systems, pediatric and family practice providers, associations, state programs and consumers to:

- Select and promote a set of child health quality measures.

- Create Maine Child Health Improvement Partnership to identify priorities and advise on child health topics in Maine.

- Build a health information technology infrastructure to support the reporting and use of quality measurement information.

- Transform and standardize the delivery of healthcare services by promoting patient centered medical home principles in child-serving practices.

- Evaluate implementation and provide timely feedback to program and policymakers.

Slide 63

IHOC's Method for Implementing Child Health Measures

- Broad stakeholder engagement to identify/prioritize child health measures and identify gaps in care needing statewide/practice improvement

- Investigate and assess the quality of data sources and feasibility of measure calculation methods.

- Collect and analyze data to inform planning, implementation, and monitoring.

- Identify policy and payment opportunities and guide change required to support child health quality improvement and measurement efforts.

- Evaluate measure implementation to inform planning and assess effectiveness and disseminate results

Slide 64

Alignment with Other Quality Initiatives in Maine

- Maine Patient Centered Medical Home Pilot and MaineCare Health Homes initiative.

- Pathways to Excellence: public reporting initiative of quality metrics supported by employer, payer and provider coalition.

- Other AAP/Health System/State Child Quality Initiatives (AAP Asthma Collaborative, MaineHealth's From the First Tooth, Let's Go!, Maine Developmental Disabilities Council, ME CDC Autism).

- State Innovation Model grant

Slide 65

Maine's Statewide Child Health Measurement Successes

- Developed IHOC Master List of Pediatric Measures

- IHOC measures adopted/used by other statewide quality initiatives (e.g. PTE, MaineCare Health Homes, SIM, health systems internal QI)

- Expanded number of child health statewide measures on Maine's CHIP Annual Reports to CMS.

- Produced annual "Summary of Pediatric Child Health Measures in Maine" report of CHIPRA and other child health measures.

- Investigated feasibility of using Health Information Exchange and statewide registry for child health measures not captured in claims

Slide 66

Using Child Health Measures for Quality Improvement at Practice-level

- Implemented data-driven QI learning collaborative (First STEPS)

- 28 practices participated, collectively serving 37,630 (about 30% of) MaineCare children

- Provided technical assistance to support state registry modifications and changes to health systems EHRs for generating practice-level IHOC child health measures (e.g. immunizations, oral health risk assessments).

- Guided MaineCare policy change and clarified billing payment to support QI and measurement (e.g. developmental screening and oral health)

Slide 67

Evaluating Success of Implementing Child Health Measures at Practice-Level

- First STEPS Phase I: Raising Immunization Rates & Building a Patient Centered Medical Home (Sept 2011 – April 2012):

- Goal: Increase overall immunization rates by more than 4 percentage points within 12 months.

- Far exceeded goal during that time period and also continued to improve over more extended period.

Slide 68

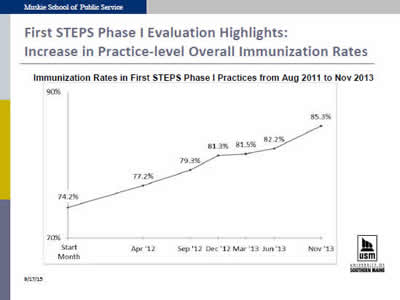

First STEPS Phase I Evaluation Highlights: Increase in Practice-level Overall Immunization Rates

Immunization Rates in First STEPS Phase I Practices from Aug 2011 to Nov 2013

Image: This graph shows the increase in the immunization rate among First STEPS Phase I practices from August 2011 to November 2013. The rate steadily increased over time. It was 74.2 percent in August 2011, 77.2 percent in April 2012, 79.3 percent in September 2012, 81.3 percent in December 2012, 81.5 percent in March 2013, 82.2 percent in June 2013, and 85.3 percent in November 2013.
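The Phase I goal was stated in percentage points, so it helps to separate that from relative (percent) change. The following is a minimal sketch using only the rates quoted in the graph description above; the function names are illustrative, and the September 2012 reading is treated here as the "roughly one year" follow-up point, which is an assumption about how the goal was assessed.

```python
# Overall immunization rates (percent) quoted for First STEPS Phase I practices.
RATES = {
    "2011-08": 74.2,
    "2012-04": 77.2,
    "2012-09": 79.3,
    "2012-12": 81.3,
    "2013-03": 81.5,
    "2013-06": 82.2,
    "2013-11": 85.3,
}

GOAL_POINTS = 4.0  # goal: increase of more than 4 percentage points


def point_change(start, end):
    """Absolute change in percentage points."""
    return round(end - start, 1)


def relative_change(start, end):
    """Relative change, as a percent of the starting rate."""
    return round((end - start) / start * 100, 1)


baseline = RATES["2011-08"]
one_year_points = point_change(baseline, RATES["2012-09"])
full_period_points = point_change(baseline, RATES["2013-11"])
one_year_relative = relative_change(baseline, RATES["2012-09"])
goal_met = one_year_points > GOAL_POINTS

print("Change after about one year (points):", one_year_points)
print("Change over full period (points):", full_period_points)
print("Relative change after about one year (%):", one_year_relative)
print("Goal of more than 4 points met:", goal_met)
```

The same rise reads as a larger number when expressed as a relative change, which is why the two units should not be mixed when comparing results against the goal.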

Slide 69

Percentage Point Change in First STEPS Phase I Practices' Combination and Individual Rates, 8/11 – 9/12

*Significant change in immunization rate before and one year after First STEPS Phase I learning sessions, p<.05.

Image: This graph shows the percentage point change in 18 different vaccination rates for First STEPS Phase I practices from August 2011 to September 2012. The average increase in vaccination rates was 5.1 percentage points. All vaccination rates increased except for one: the rotavirus vaccination rate decreased 3.5 percentage points. Increases ranged from 2.2 percentage points for human papillomavirus vaccination to 14.9 percentage points for the 13-year-old meningococcal vaccination.

Slide 70

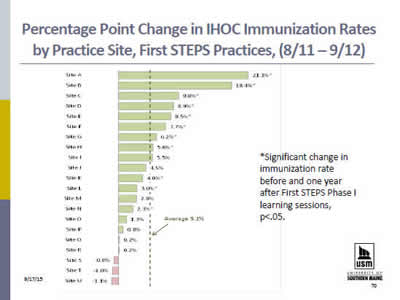

Percentage Point Change in IHOC Immunization Rates by Practice Site, First STEPS Practices, (8/11 – 9/12)

*Significant change in immunization rate before and one year after First STEPS Phase I learning sessions, p<.05.

Image: This graph shows the percentage point change in IHOC immunization rates for each of the First STEPS Phase I practices from August 2011 to September 2012. On average, practices had a 5.1 percentage point increase. Among the 21 practices, 18 increased their vaccination rates. Practice-level increases ranged from 0.2 to 21.1 percentage points. Three practices saw decreases in vaccination rates, ranging from 0.8 to 1.1 percentage points.

Slide 71

Implementing Practice-level CHIPRA Immunization Rates

Challenges

- Existing registry reporting functions were based on ACIP guidelines (grace periods/age cut-offs) that meet national CDC measure criteria; reports did not support the calculation of CHIPRA measures.

- Modifying state registry to produce practice-level CHIPRA measures took longer than expected, requiring an interim approach.

- Additional challenges arose because not all practices entered dose data consistently for all age groups or for doses given in the past.

- Challenges in producing statewide CHIPRA rates from registry.

Slide 72

Implementing Practice-level CHIPRA Immunization Rates

Successes

- Increased use of the state registry and accuracy of the data reported.

- Monthly practice-level reports helpful in measuring progress toward quality improvement goals.

- Producing registry reports for pediatric practices not in First STEPS to submit rates for public reporting to Pathways to Excellence.

- Changes to registry underway so practices will be able to:

- Produce reports based on CHIPRA measures

- Produce reports according to MaineCare eligibility status

- Produce reports for comparison across affiliated locations.

- Other statewide immunization measures (NIS, ACIP) have improved significantly, which has been attributed to IHOC/First STEPS.

Slide 73

First STEPS Phase II: Developmental Screening Measures

- First STEPS Phase II: Developmental, Autism and Lead Screening (optional anemia screening):

- Monthly data reports based on chart review.

- MaineCare claims.

- Goal for developmental, autism, and lead screening rates:

- Improve the rate of these screenings (according to Bright Futures guidelines) by 50% between May 2012 and December 2012.

Slide 74

Measurement: Developmental Screening

- Challenges with Claims-based measure:

- Extremely (and unexpectedly) low statewide rates.

- Difficulty identifying specific types of screenings using the 96110 billing code as specified in the measure.

- Policy Response:

- MaineCare clarified and modified the billing method for developmental and autism-specific screenings (and autism testing) for use by primary care providers.

- Clarified existing rate structure for related screenings and tests.

- Added modifiers* to existing billing codes to distinguish between global developmental & autism-specific screening, and follow-up autism testing.

*96110 = global developmental screening

*96110 HI = autism-specific screening

*96111 HK = autism testing

Slide 75

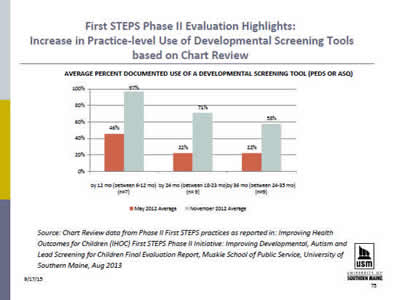

First STEPS Phase II Evaluation Highlights: Increase in Practice-level Use of Developmental Screening Tools based on Chart Review

Average Percent Documented Use of a Developmental Screening Tool (PEDS OR ASQ)

Source: Chart Review data from Phase II First STEPS practices as reported in: Improving Health Outcomes for Children (IHOC) First STEPS Phase II Initiative: Improving Developmental, Autism and Lead Screening for Children Final Evaluation Report, Muskie School of Public Service, University of Southern Maine, Aug 2013

The graph shows the percent of First STEPS Phase II practices that documented use of a developmental screening tool (the PEDS or ASQ), in May of 2012 and in November of 2012. In May of 2012, 46 percent of practices documented use of a screening tool for 6 to 12 month olds, 22 percent documented use for 18 to 23 month olds, and 22 percent documented use for 24 to 35 month olds. By November 2012, more practices were documenting use of developmental screening tools for all age groups. 97 percent documented use for 6 to 12 month olds, 71 percent documented use for 18 to 23 month olds, and 58 percent documented use for 24 to 35 month olds.

Slide 76

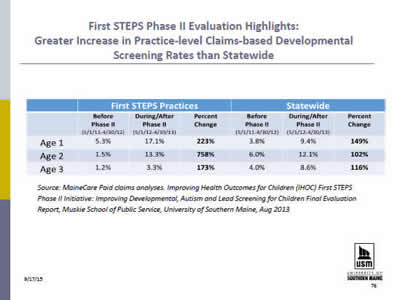

First STEPS Phase II Evaluation Highlights: Greater Increase in Practice-level Claims-based Developmental Screening Rates than Statewide

| | First STEPS Practices: Before Phase II (5/1/11-4/30/12) | First STEPS Practices: During/After Phase II (5/1/12-4/30/13) | First STEPS Practices: Percent Change | Statewide: Before Phase II (5/1/11-4/30/12) | Statewide: During/After Phase II (5/1/12-4/30/13) | Statewide: Percent Change |
|---|---|---|---|---|---|---|
| Age 1 | 5.3% | 17.1% | 223% | 3.8% | 9.4% | 149% |
| Age 2 | 1.5% | 13.3% | 758% | 6.0% | 12.1% | 102% |
| Age 3 | 1.2% | 3.3% | 173% | 4.0% | 8.6% | 116% |

Source: MaineCare Paid claims analyses. Improving Health Outcomes for Children (IHOC) First STEPS Phase II Initiative: Improving Developmental, Autism and Lead Screening for Children Final Evaluation Report, Muskie School of Public Service, University of Southern Maine, Aug 2013

The table shows the percent increase in developmental screening by age 1, age 2, and age 3, for First STEPS Phase II practices versus statewide for the same time period. There were large increases in developmental screening for all ages, both among First STEPS practices and statewide; however rates were low overall. Among First STEPS practices, developmental screening by age 1 increased from 5.3 percent to 17.1 percent. Statewide the increase went from 3.8 percent to 9.4 percent. Among First STEPS practices, developmental screening by age 2 increased from 1.5 percent to 13.3 percent. Statewide the increase went from 6.0 percent to 12.1 percent. Among First STEPS practices, developmental screening by age 3 increased from 1.2 percent to 3.3 percent. Statewide the increase went from 4.0 percent to 8.6 percent.
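The "Percent Change" column is a relative change (the increase divided by the starting rate), not a percentage point difference. The sketch below recomputes it from the rounded rates shown in the table; the function name `relative_change` is illustrative. The results are close to the published column but not identical, presumably because the report worked from unrounded rates (an assumption, not something the slides state).

```python
def relative_change(before: float, after: float) -> int:
    """Relative percent change from `before` to `after`, rounded to a whole percent."""
    return round((after - before) / before * 100)


# (before, after) rates in percent, taken from the table as rounded values.
first_steps = {"Age 1": (5.3, 17.1), "Age 2": (1.5, 13.3), "Age 3": (1.2, 3.3)}
statewide = {"Age 1": (3.8, 9.4), "Age 2": (6.0, 12.1), "Age 3": (4.0, 8.6)}

for age in first_steps:
    fs = relative_change(*first_steps[age])
    sw = relative_change(*statewide[age])
    print(f"{age}: First STEPS {fs}%, statewide {sw}%")
```

For example, the First STEPS age 1 rate rose 11.8 percentage points (5.3 to 17.1), which is a relative increase of about 223 percent.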

Slide 77

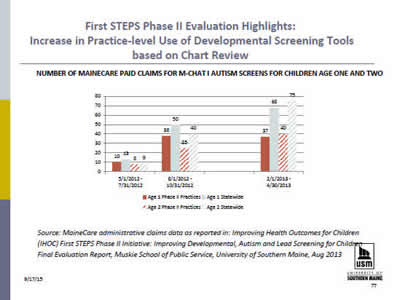

First STEPS Phase II Evaluation Highlights: Increase in Practice-level Use of Developmental Screening Tools based on Chart Review

Number of MaineCare Paid Claims for M-CHAT I Autism Screens for Children Age One and Two

Source: MaineCare administrative claims data as reported in: Improving Health Outcomes for Children (IHOC) First STEPS Phase II Initiative: Improving Developmental, Autism and Lead Screening for Children Final Evaluation Report, Muskie School of Public Service, University of Southern Maine, Aug 2013

The graph shows the number of MaineCare paid claims for M-CHAT autism screens for children ages 1 and 2. It compares First STEPS Phase II practices to practices statewide for 3 time periods: May-July 2012, August-October 2012, and February-April 2013. Among First STEPS practices, there were 10, 38, and 37 paid claims for 1-year-olds in these time periods, and 8, 25, and 40 paid claims for 2-year-olds. In the rest of the state, there were 13, 50, and 68 paid claims for 1-year-olds in these time periods, and 9, 40, and 75 paid claims for 2-year-olds.

Slide 78

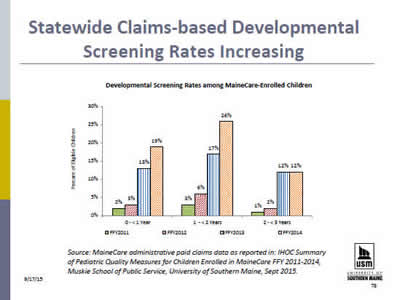

Statewide Claims-based Developmental Screening Rates Increasing

Developmental Screening Rates among MaineCare-Enrolled Children

Source: MaineCare administrative paid claims data as reported in: IHOC Summary of Pediatric Quality Measures for Children Enrolled in MaineCare FFY 2011-2014, Muskie School of Public Service, University of Southern Maine, Sept 2015.

The graph shows the percent of eligible children in MaineCare who received developmental screening by age 1, age 2, and age 3, in FFYs 2011, 2012, 2013, and 2014. Rates increased over time in each age group, with slightly higher screening rates by ages 1 and 2 than by age 3. Screening rates by age 1 increased from 2 percent to 3 percent to 13 percent to 19 percent from 2011 to 2014. Screening rates by age 2 increased from 3-6 percent to 17-26 percent from 2011 to 2014. Screening rates by age 3 increased from 1-2 percent to 12 percent from 2011 to 2013 and stayed at 12 percent in 2014.

Slide 79

Lessons Learned

- Child health measures need to be actionable and available at the practice-level to improve performance.

- Data source matters: measures cannot be operationalized without reliable methods for capturing, collecting, calculating, and reporting the data.

- Integrating data system improvements as part of child QI efforts helps increase visibility and accuracy of data and demonstrates how data can be 'meaningfully used' to sustain quality improvement over time.

- Aligning measures across state initiatives is key for provider buy-in and to sustain quality improvement work after grant funding.

Slide 80

Questions or Comments?

For more information:

Please contact: Kimberley Fox, kfox@usm.maine.edu

Or visit the IHOC website:

http://www.maine.gov/dhhs/oms/provider/ihoc.shtml

Slide 81

Questions?

Slide 82

Thank You!

- Please fill out the evaluation questions on screen

- Additional Questions? Contact:

- Alyssa Walen (Alyssa.Walen@AcademyHealth.org)

- Stephanie Kennedy (Stephanie.Kennedy@AcademyHealth.org)