Defining Diagnostic Errors and Diagnostic Performance

Measurement begins with a definition. The recent NASEM report defines diagnostic error as the “failure to establish an accurate and timely explanation of the patient’s health problem(s) or communicate that explanation to the patient.”3 This definition provides three key concepts that need to be operationalized, namely:

- Accurately identifying the explanation (or diagnosis) of the patient’s problem.

- Providing this explanation in a timely manner.

- Effectively communicating the explanation.

Whereas these criteria are clear-cut in some cases (e.g., a patient diagnosed with bronchitis who is actually having a pulmonary embolus), they are more ambiguous in others, where even experienced clinicians may not reach consensus.18,19 Uncertainty in the diagnostic process is ubiquitous, accuracy is often poorly defined, and for most conditions there are no widely accepted standards for how long a diagnosis should take. Furthermore, patient presentations often evolve over time, and sometimes the best approach is to defer diagnosis or testing, or to withhold a definitive diagnosis until more information is available or until symptoms persist or evolve.20

Diagnostic performance can be defined not only by accuracy and timeliness but also by efficiency (e.g., minimizing resource expenditure and limiting the patient’s exposure to risk).21 Measurement of diagnostic performance should hence consider the broader context of value-based care, including quality, risks, and costs, rather than focus simply on achieving the correct diagnosis in the shortest time.16,22

Understanding the Multifactorial Context of Diagnostic Safety

Diagnostic errors are best conceptualized as events that may be distributed over time and place, shaped by multiple contextual factors and by complex dynamics involving system-related, patient-related, team-related, and individual cognitive factors. Availability of clinical data that provide a longitudinal picture across care settings is essential to understanding a patient’s diagnostic journey.

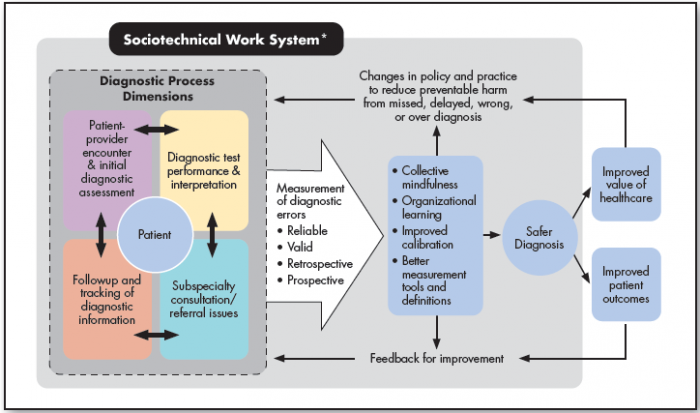

A multidisciplinary framework, the Safer Dx framework14 (Figure 1), has been proposed to help advance the measurement of diagnostic errors. The framework follows the Donabedian Structure-Process-Outcome model,23 which approaches quality improvement in three domains:

- Structure (characteristics of care providers, their tools and resources, and the physical/organizational setting).

- Process (both interpersonal and technical aspects of activities that constitute healthcare).

- Outcome (change in the patient’s health status or behavior).

The recent NASEM report adapted concepts from the Safer Dx framework and other sources to generate a similar framework that emphasizes system-level learning and improvement.3

Measurement must account for all aspects of the diagnostic process as well as the distributed nature of diagnosis, i.e., evolving over time and not limited to what happens during a single clinician-patient visit. The five interactive process dimensions of diagnosis include:

- Patient-clinician encounter (history, physical examination, ordering of tests/referrals based on assessment).

- Performance and interpretation of diagnostic tests.

- Followup and tracking of diagnostic information over time.

- Subspecialty and referral-specific factors.

- Patient-related factors.24

Diagnostic performance is the outcome of these processes within a complex, adaptive sociotechnical system.25,26 Safe diagnosis (as opposed to missed, delayed, or wrong) is an intermediate outcome compared with more distal patient and healthcare delivery outcomes.

Figure 1. Safer Dx Framework

Source: Singh H, Sittig DF. Advancing the science of measurement of diagnostic errors in healthcare: the Safer Dx framework. BMJ Qual Saf 2015;24(2):103-10. doi: 10.1136/bmjqs-2014-003675.

* The sociotechnical work system includes eight technological and nontechnological dimensions, as well as external factors affecting diagnostic performance and measurement, such as payment systems, legal factors, national quality measurement initiatives, accreditation, and other policy and regulatory requirements.

The Safer Dx framework is interactive and acts as a continuous feedback loop. It includes the complex adaptive sociotechnical work system in which diagnosis takes place (structure), the process dimensions in which diagnoses evolve beyond the doctor’s visit (process), and the resulting outcomes of “safe diagnosis” (i.e., correct and timely) as well as patient and healthcare outcomes (outcomes). Valid and reliable measurement of diagnostic errors results in collective mindfulness, organizational learning, improved collaboration, and better measurement tools and definitions. In turn, these proximal outcomes enable safer diagnosis overall, which contributes to both improved patient outcomes and greater value of healthcare. The knowledge created by measurement will also lead to changes in policy and practice that reduce diagnostic errors, and it will provide feedback for further improvement.

Understanding these special considerations for measurement is essential to avoid confusing diagnosis with other care processes (e.g., screening, prevention, management, or treatment). It also keeps diagnostic safety as a concept distinct from other common concerns, such as communication breakdowns, readmissions, and care transitions, which may be either contributors to or outcomes of diagnostic errors. The Safer Dx framework underscores that diagnostic errors can emerge across multiple episodes of care and that clinical data from across the care continuum are essential to inform measurement. In reality, however, these data are not always available.

Providers and HCOs, often unaware of their patients’ ultimate diagnosis-related outcomes, could benefit from measurement of diagnostic performance, which can then contribute to better system-level understanding of the problem and enable improvement through feedback and learning.27-29 Developing and implementing measurement methods that focus on what matters is consistent with LHS approaches.30 In the absence of robust and accurate measures for diagnostic safety or any current external accountability metric, HCOs should focus on implementing measurement strategies that can lead to actionable data for improvement efforts.31

Choosing Data Sources for Measurement

Measurement must account for real-world clinical practice, taking into consideration not only the individual clinician’s decision making and reasoning but also the influence of systems, team members, and patients on the diagnostic process. As a first step, safety professionals need access to both reliable and valid data sources, ideally across the longitudinal continuum of patient care, as well as pragmatic tools to help measure and address diagnostic error.

Many methods have been suggested to study diagnostic errors.32 While most of them have been evaluated in research settings, few HCOs take a systematic approach to measure or monitor diagnostic error in routine clinical care.33 However, with appropriate tools and guidance, all HCOs should be able to adopt systematic approaches to measure and learn about diagnostic safety.

Not all methods to measure diagnostic performance are feasible to implement given limited time and resources. For instance, direct video observations of encounters between clinicians and patients would be excellent, but these are expensive and difficult to sustain on the scale needed for systemwide change.34 In contrast, a more viable alternative for most HCOs is to leverage existing data sources, such as large electronic health record (EHR) data repositories.

EHRs can be useful for measuring both discrete (and perhaps relatively uncommon) events and risks that are systematic, recurrent, or outside clinicians’ awareness. For example, HCOs with the resources to query or mine electronic data repositories have options for both retrospective and prospective measurements of diagnostic safety.35
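As a rough illustration of a retrospective EHR query, the sketch below flags primary care visits that were followed by an unplanned hospitalization of the same patient within a short window, the kind of pattern an electronic trigger (e-trigger) might use to select records for review. The `Visit` record, its field names and labels, and the 14-day window are hypothetical assumptions, not a validated trigger definition; flagged visits are only candidates that would still need clinician review to determine whether a diagnostic error occurred.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Visit:
    """Hypothetical, simplified encounter record; real EHR schemas differ."""
    patient_id: str
    visit_date: date
    setting: str     # assumed labels: "primary_care" or "inpatient"
    unplanned: bool  # assumed flag marking unscheduled admissions


def flag_index_visits(visits, window_days=14):
    """Return primary care visits followed by an unplanned admission of the
    same patient 1..window_days days later (the window is an assumption)."""
    window = timedelta(days=window_days)
    admissions = [v for v in visits if v.setting == "inpatient" and v.unplanned]
    flagged = []
    for visit in visits:
        if visit.setting != "primary_care":
            continue
        for adm in admissions:
            gap = adm.visit_date - visit.visit_date
            if adm.patient_id == visit.patient_id and timedelta(0) < gap <= window:
                flagged.append(visit)  # candidate record for clinician review
                break  # one qualifying admission is enough to flag the visit
    return flagged


# Illustrative usage with made-up records.
visits = [
    Visit("A", date(2020, 3, 1), "primary_care", False),
    Visit("A", date(2020, 3, 9), "inpatient", True),   # 8 days later: flagged
    Visit("B", date(2020, 3, 1), "primary_care", False),
    Visit("B", date(2020, 4, 20), "inpatient", True),  # 50 days later: not flagged
]
print([v.patient_id for v in flag_index_visits(visits)])  # ['A']
```

An HCO would more likely express such a rule in SQL against its data warehouse, and the trigger's settings, window, and exclusions (e.g., planned admissions) would need local tuning and validation; the nested loop here simply keeps the logic easy to read.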

Even in the absence of standardized methods for measuring diagnostic error, HCOs should measure, learn from, and intervene to address threats to diagnostic safety by following basic measurement principles. For instance, the recently released Salzburg Statement on Moving Measurement Into Action was published through a collaboration between the Salzburg Global Seminar and the Institute for Healthcare Improvement. The statement recognizes the absence of standard and effective safety measures and provides eight broad principles for patient safety measurement. These principles act as “a call to action for all stakeholders in reducing harm, including policymakers, managers and senior leaders, researchers, health care professionals, and patients, families, and communities.”36

In the same spirit, we propose several general assumptions that should guide and facilitate institutional practices for measurement of diagnostic safety and lead to actionable intelligence that identifies opportunities for improvement:

- The underlying motivation for measurement is to learn from errors and improve clinical operations, as opposed to merely responding to external incentives or disincentives.

- Efforts should focus on conditions that are relatively commonly missed and for which missed diagnoses lead to patient harm.

- Measurement should not be used punitively to identify provider failures but rather should be used to uncover system-level problems.37 Information should be used to inform system fixes and related organizational processes and procedures that constitute the sociotechnical context of diagnostic safety.

- Use of a single method to identify diagnostic error, such as manual chart reviews or voluntary reporting, will have limited effectiveness.38,39 For ongoing, widespread monitoring at an organizational level, a combination of methods will have higher yield.

- Hindsight bias (i.e., when knowledge of the outcome significantly affects perception of past events) is inherent in most analyses and needs to be minimized by focusing on how missed opportunities can become learning opportunities.
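The point that a combination of methods yields more than any single method can be illustrated with a toy calculation: when different methods catch partly different cases, the union of their findings is larger than any one method's list, and each method may contribute cases that no other method found. The method names and case identifiers below are made up for illustration and do not represent real data.

```python
# Made-up case identifiers confirmed as diagnostic errors by each method
# (hypothetical data for illustration only).
found_by = {
    "voluntary_reports": {"c01", "c02", "c03"},
    "chart_review": {"c02", "c04", "c05"},
    "patient_reports": {"c03", "c06"},
}


def yield_summary(found_by):
    """Return per-method counts, the number of distinct cases found by all
    methods combined, and the cases each method found that no other did."""
    per_method = {name: len(cases) for name, cases in found_by.items()}
    combined = set().union(*found_by.values())
    unique = {
        name: cases - set().union(*(c for other, c in found_by.items() if other != name))
        for name, cases in found_by.items()
    }
    return per_method, len(combined), unique


per_method, combined, unique = yield_summary(found_by)
print(per_method)  # {'voluntary_reports': 3, 'chart_review': 3, 'patient_reports': 2}
print(combined)    # 6
print(unique)
```

Counting each method's unique contribution in this way is one possible way to judge whether adding a method is worth its cost.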

With this general guidance in mind, we recommend that HCOs begin to monitor diagnostic safety using the most robust data sources currently available. Table 1 describes various data sources and strategies that could enable measurement of diagnostic error, along with their respective strengths and limitations. The following sections describe these data sources and strategies and the evidence to support their use for measurement of diagnostic safety.

Table 1. Data Sources and Related Strategies for Measurement of Diagnostic Safety

| Data Source | What Can Be Learned | Approaches to Operationalizing Data Collection | Approaches to Operationalizing Data Synthesis |
|---|---|---|---|
| Routinely recorded quality and safety events | Awareness of the impact and harm of diagnostic safety events. Emerging patterns that may suggest high-risk situations. | | |
| Solicited clinician reports | Understanding of system-related and cognitive factors that affect diagnostic safety. | | |
| Solicited patient reports | Understanding of system-related, patient-related, and communication factors that affect diagnostic safety. | | |
| Administrative billing data | Detection of diagnostic safety-related patterns and events within a cohort defined by high-risk indicators. | ICD-based indicators applied to administrative datasets to select cohorts for further review (ICD = International Classification of Diseases) | Statistical analysis |
| Medical records (random, selective, and e-trigger enhanced) | Detection of diagnostic safety events within a sample of screened or randomly selected records. Insight into vulnerable structures and processes. | | |
| Advanced data science methods, including natural language processing of EHR data* | Detection of possible diagnostic safety events (retrospectively or in real time) based on machine-learning algorithms. | | |

Table 1, continued

| Data Source | Strengths | Limitations | Examples |
|---|---|---|---|
| Routinely recorded quality and safety events | | | Meeks, et al., 2014⁴²; Cifra, et al., 2015¹¹³; Gupta, et al., 2017¹¹⁴; Shojania, et al., 2003¹¹⁵ |
| Solicited clinician reports | | | Okafor, et al., 2015⁵² |
| Solicited patient reports | | | Walton, et al., 2017¹¹⁶; Fowler, et al., 2008¹¹⁷; Giardina, et al., 2018⁶¹ |
| Administrative billing data | Data that are routinely collected and widely accessible. | | Newman-Toker, 2018⁶⁴; Mahajan, et al., 2020⁶⁶ |
| Medical records (random, selective, and e-trigger enhanced) | | | Bhise, et al., 2017⁷⁰; Murphy, et al., 2014⁷⁸; Singh, et al., 2012¹⁹ |
| Advanced data science methods, including natural language processing of EHR data* | | | None known |

Note: Approaches marked with an asterisk (*) have potential for future application but are not currently developed for real-time surveillance.
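The administrative billing data row of Table 1 pairs ICD-based indicators with statistical analysis. A minimal sketch of one such analysis, assuming a simplified look-back design, counts admissions for a target disease that were preceded by an emergency department (ED) treat-and-release visit coded with a related symptom, and compares a near window with an equally long earlier window. The `Encounter` record, the `kind` labels, the 30-day window, and the symptom-disease pair (ICD-10 prefix R42 for dizziness, I63 for cerebral infarction) are illustrative assumptions rather than a validated indicator; the excess count is only a crude signal that assumes the baseline rate of symptom visits is the same in both windows.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Encounter:
    """Hypothetical claims-style record; field names are assumptions."""
    patient_id: str
    encounter_date: date
    icd10: str  # principal diagnosis code
    kind: str   # assumed labels: "ed_discharge" (treat-and-release) or "admission"


def look_back(encounters, symptom_prefix="R42", disease_prefix="I63", window_days=30):
    """Count disease admissions preceded by a symptom-coded ED treat-and-release
    visit in a near window (1..window_days days earlier) and in an equally long
    comparison window (window_days+1..2*window_days days earlier)."""
    admissions = [e for e in encounters
                  if e.kind == "admission" and e.icd10.startswith(disease_prefix)]
    ed_visits = [e for e in encounters
                 if e.kind == "ed_discharge" and e.icd10.startswith(symptom_prefix)]
    near = comparison = 0
    for adm in admissions:
        # Days between each of this patient's symptom-coded ED visits and the admission.
        gaps = [(adm.encounter_date - ed.encounter_date).days
                for ed in ed_visits if ed.patient_id == adm.patient_id]
        if any(1 <= g <= window_days for g in gaps):
            near += 1
        if any(window_days < g <= 2 * window_days for g in gaps):
            comparison += 1
    # Crude excess, assuming equal baseline rates of symptom visits in both windows.
    return {"admissions": len(admissions), "near": near,
            "comparison": comparison, "excess": near - comparison}


# Illustrative usage with made-up encounters.
encounters = [
    Encounter("P1", date(2019, 1, 1), "R42", "ed_discharge"),
    Encounter("P1", date(2019, 1, 10), "I63.9", "admission"),   # 9 days: near
    Encounter("P2", date(2019, 1, 1), "R42", "ed_discharge"),
    Encounter("P2", date(2019, 2, 15), "I63.5", "admission"),   # 45 days: comparison
    Encounter("P3", date(2019, 2, 25), "R51", "ed_discharge"),  # different symptom code
    Encounter("P3", date(2019, 3, 1), "I63.9", "admission"),
    Encounter("P4", date(2019, 5, 1), "R42", "ed_discharge"),
    Encounter("P4", date(2019, 5, 20), "I63.9", "admission"),   # 19 days: near
]
print(look_back(encounters))  # {'admissions': 4, 'near': 2, 'comparison': 1, 'excess': 1}
```

In practice such counts would be computed over a large cohort, and, consistent with the table's description of selecting cohorts for further review, flagged admissions would typically go to record review before being counted as diagnostic errors.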